
AI-Teaching Assistant

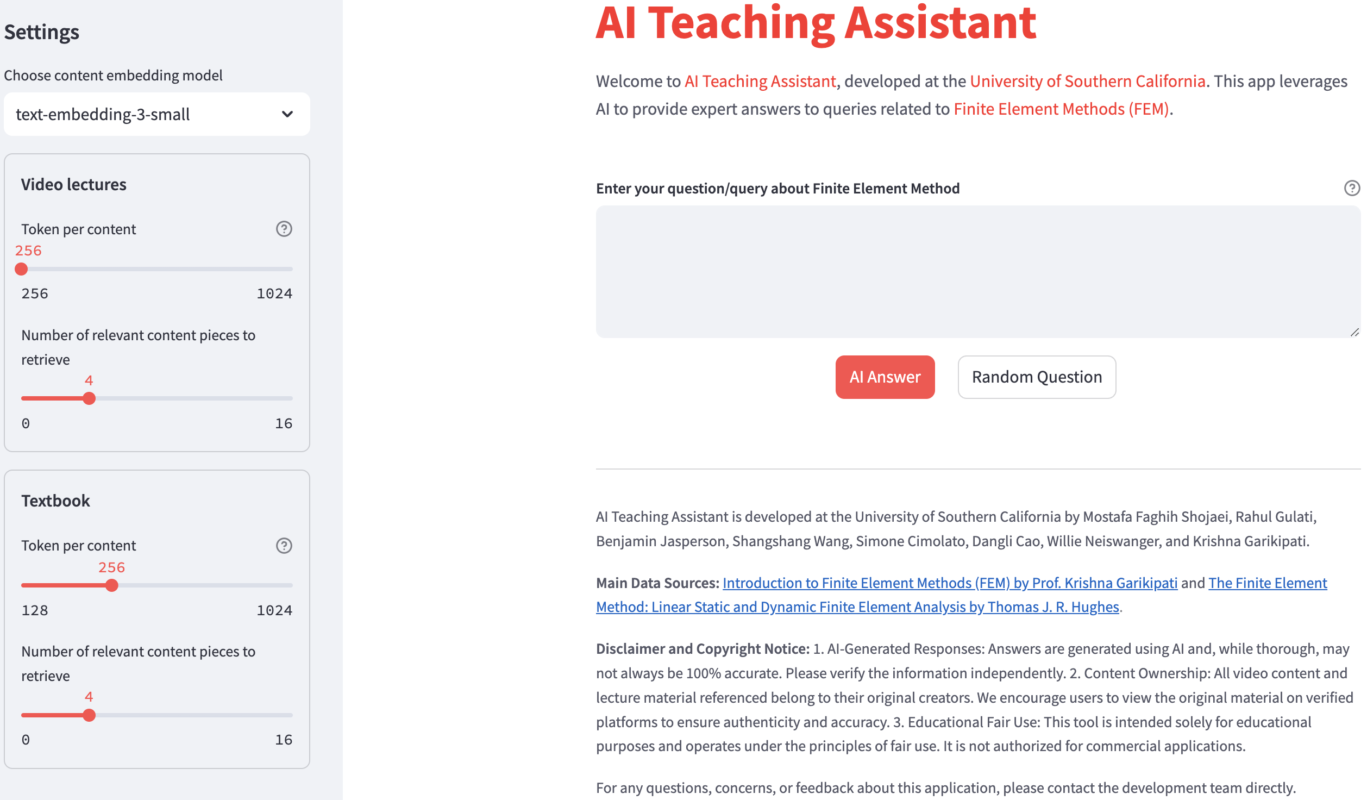

- Developed scripts to extract structured data from textbooks and lectures, using the OpenAI API to generate question-answer (QA) pairs for training.
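A minimal sketch of the processing around such a pipeline. It assumes three things:

- the source text is split into fixed-size word chunks;
- the model is prompted to reply with a JSON list of `{"question": ..., "answer": ...}` objects;
- malformed replies are discarded.

The helper names (`chunk_text`, `build_prompt`, `parse_qa_pairs`) and the JSON schema are hypothetical. The OpenAI API call itself is deliberately left out.

```python
import json

def chunk_text(text, max_words=200):
    """Split raw textbook/lecture text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def build_prompt(chunk, n_pairs=3):
    """Ask the model for n_pairs QA pairs as a JSON list (assumed output schema)."""
    return (
        f"From the passage below, write {n_pairs} question-answer pairs. "
        'Reply only with a JSON list of objects with keys "question" and "answer".\n\n'
        f"Passage:\n{chunk}"
    )

def parse_qa_pairs(reply):
    """Parse the model reply, keeping only well-formed pairs with non-empty strings."""
    try:
        items = json.loads(reply)
    except json.JSONDecodeError:
        return []  # malformed reply: skip this chunk
    if not isinstance(items, list):
        return []
    pairs = []
    for item in items:
        if not isinstance(item, dict):
            continue
        q, a = item.get("question"), item.get("answer")
        if isinstance(q, str) and isinstance(a, str) and q.strip() and a.strip():
            pairs.append({"question": q.strip(), "answer": a.strip()})
    return pairs

# The reply below is a hard-coded stand-in for an OpenAI API response.
reply = '[{"question": "What does LoRA stand for?", "answer": "Low-Rank Adaptation."}, {"question": ""}]'
print(parse_qa_pairs(reply))  # keeps only the first, complete pair
```

Validating every reply before it enters the training set keeps one bad generation from corrupting the fine-tuning data.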

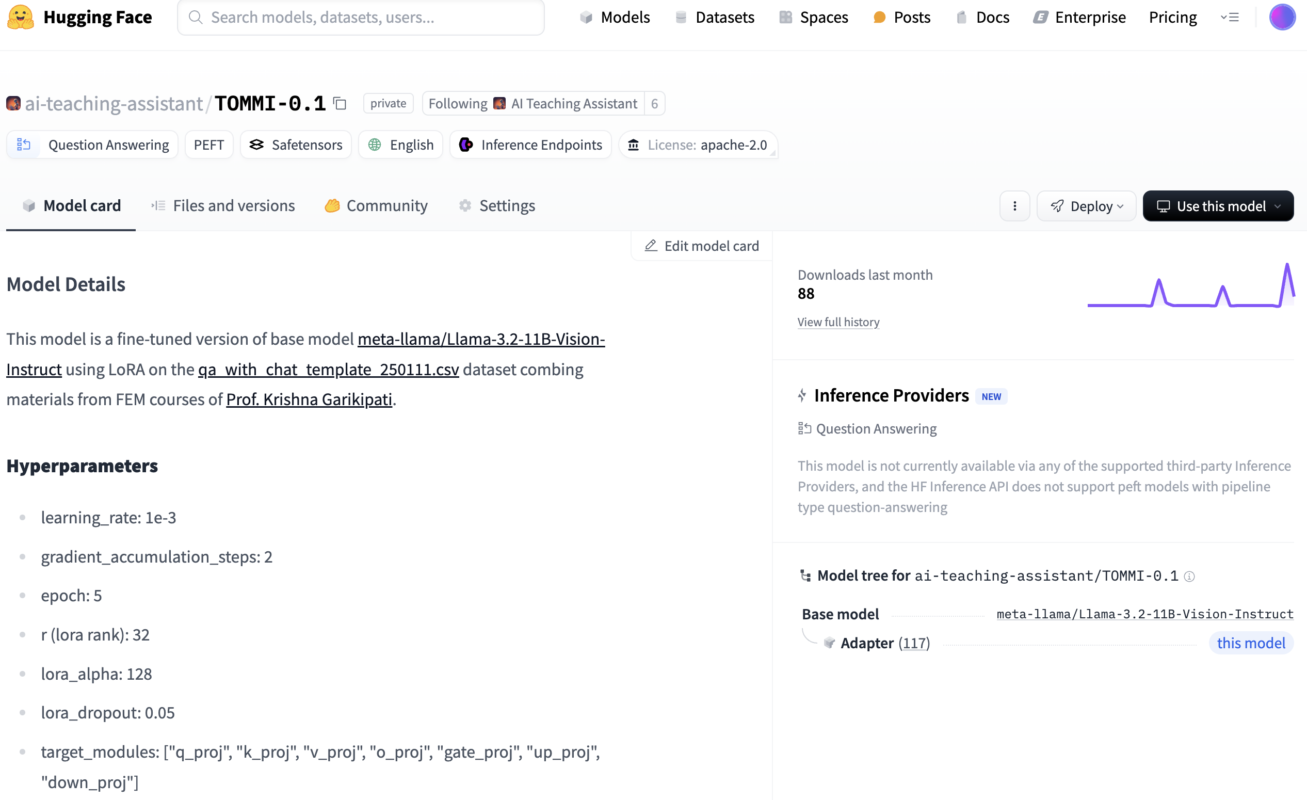

- Fine-tuned Llama 3.2-11B with LoRA to build a domain-specific expert, and carried out hyperparameter optimization with Optuna and Weights & Biases (W&B).

- Developed a Retrieval-Augmented Generation (RAG) pipeline built on several embedding models to supply retrieved context to the fine-tuned multimodal large language model (LLM).

- The goal is to improve education by providing a specialized AI assistant for targeted academic support.

- A demo of AI-TA is available here. A paper on this work has been submitted to a reputed conference and is under review.
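LoRA keeps the pretrained weight matrix W frozen and trains a low-rank update. The adapted layer computes h = W x + (alpha / r) * B (A x), where:

- A is r x d_in;
- B is d_out x r and is initialized to zero, so training starts from the pretrained model;
- r is much smaller than d_in and d_out.

The pure-Python sketch below illustrates only that forward pass on toy matrices. It is not the setup used for Llama 3.2-11B, which would typically rely on a library such as Hugging Face PEFT. The rank, alpha, and initialization values are illustrative assumptions.

```python
import random

def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]

class LoRALinear:
    """Frozen weight W plus a trainable rank-r update (alpha / r) * B @ A."""

    def __init__(self, W, r=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W                                                   # frozen pretrained weights
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]  # r x d_in, random
        self.B = [[0.0] * r for _ in range(d_out)]                   # d_out x r, zero-initialized
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))                   # low-rank path: B (A x)
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        """Only A and B are trained: r * (d_in + d_out) numbers instead of d_in * d_out."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
layer = LoRALinear(W, r=1, alpha=2.0)
x = [1.0, 2.0, 3.0]
print(layer.forward(x))          # [7.0, -1.0]: equals W x at initialization because B is zero
print(layer.trainable_params())  # 5 = 1 * (3 + 2)
```

Only A and B receive gradients, which is why LoRA makes fine-tuning an 11B-parameter model affordable.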
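At its core, the retrieval step of a RAG pipeline does three things:

1. embed the query;
2. rank the stored chunks by similarity to it;
3. prepend the best matches to the LLM prompt.

A minimal sketch using cosine similarity over precomputed embeddings follows. The toy 3-dimensional vectors stand in for the output of a real embedding model. `retrieve` and `build_rag_prompt` are hypothetical helpers.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def retrieve(query_vec, store, k=2):
    """Return the k (score, text) pairs whose embeddings are most similar to the query."""
    scored = [(cosine(query_vec, vec), text) for text, vec in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]

def build_rag_prompt(question, hits):
    """Prepend the retrieved passages as context for the fine-tuned LLM."""
    context = "\n".join(f"- {text}" for _, text in hits)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

# Toy knowledge base: (chunk text, embedding) pairs.
store = [
    ("Stress is force per unit area.", [0.9, 0.1, 0.0]),
    ("Ohm's law relates voltage and current.", [0.0, 0.2, 0.9]),
    ("Strain measures relative deformation.", [0.8, 0.3, 0.1]),
]
hits = retrieve([1.0, 0.2, 0.0], store, k=2)
print(build_rag_prompt("What is stress?", hits))
```

Swapping in a different embedding model only changes how the vectors are produced, so several models can be compared with the same ranking code.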

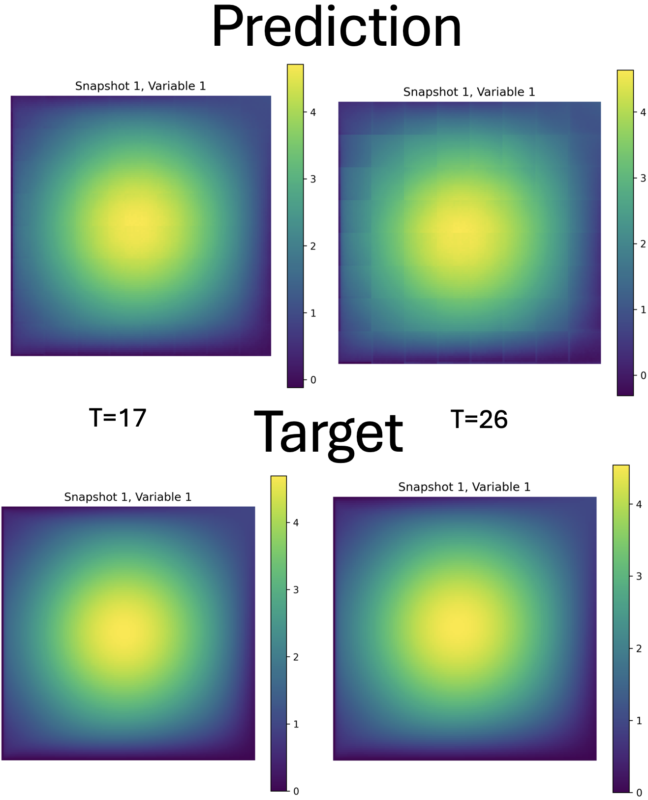

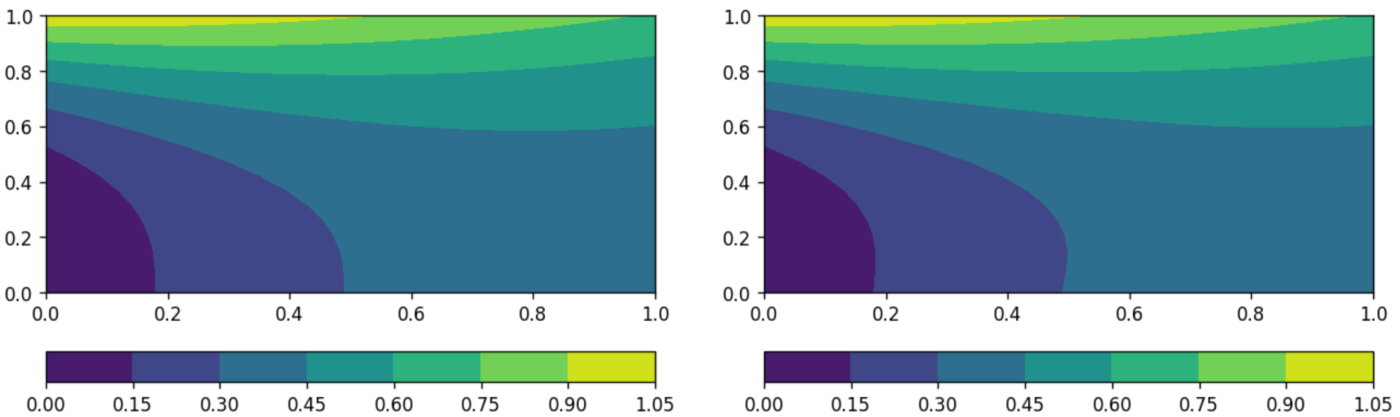

Vision Transformer to learn physics

- Applying Vision Transformers (ViT) to learn physics from simulation images.

- Using generative AI to learn coupled emergent spatiotemporal multiphysics phenomena.

- Link to GitHub repo.
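A ViT turns each image into a sequence of tokens in three steps:

1. cut the image into non-overlapping P x P patches;
2. flatten each patch;
3. project each patch linearly into an embedding, then add position embeddings.

A minimal pure-Python sketch of the patchify and projection steps follows, for a single-channel simulation field. The 4 x 4 field, the patch size, and the fixed projection matrix are illustrative assumptions. A real model learns the projection, adds position embeddings (not shown), and implements this with tensor reshapes or a strided convolution.

```python
def patchify(image, p):
    """Split an H x W field (list of rows) into flattened, non-overlapping p x p patches.

    Patches are returned in row-major order, i.e. as the token sequence a ViT consumes.
    """
    h, w = len(image), len(image[0])
    if h % p or w % p:
        raise ValueError("height and width must be divisible by the patch size")
    patches = []
    for i in range(0, h, p):          # top-left row of each patch
        for j in range(0, w, p):      # top-left column of each patch
            patches.append([image[i + di][j + dj] for di in range(p) for dj in range(p)])
    return patches

def embed(patches, w_proj):
    """Linearly project each flattened patch (length p*p) to a token of size len(w_proj)."""
    return [[sum(wk * xk for wk, xk in zip(row, patch)) for row in w_proj] for patch in patches]

# Toy 4 x 4 "simulation field" with values 0..15, cut into 2 x 2 patches.
field = [[4 * r + c for c in range(4)] for r in range(4)]
patches = patchify(field, 2)
tokens = embed(patches, [[1, 1, 1, 1], [1, 0, 0, -1]])
print(patches[0], tokens[0])  # [0, 1, 4, 5] [10, -5]
```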

Multimodal transformers

- Developing a vision-language model (VLM) to explain physical simulations and their underlying mathematics.

- Integrating the fine-tuned Llama 3.2-11B (for language processing) with a vision transformer, coupled through cross-attention between the Llama embeddings and the visual tokens.
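In cross-attention, one sequence provides the queries and another provides the keys and values. The output is softmax(Q K^T / sqrt(d)) V. The sketch assumes the usual arrangement for this kind of coupling: the language embeddings act as queries, and the visual tokens supply keys and values. Each text position then gathers the visual information most relevant to it.

This is a single-head, pure-Python illustration with identity projections, which is a simplifying assumption. A real layer applies learned W_Q, W_K, and W_V matrices, uses multiple heads, and often adds gating.

```python
import math

def softmax(scores):
    m = max(scores)                                   # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(text_tokens, visual_tokens):
    """Single-head scaled dot-product cross-attention.

    Queries come from the text tokens; keys and values both come from the visual
    tokens (identity projections assumed, so all tokens share dimension d).
    Returns one d-dim output per text token and the attention weights.
    """
    d = len(visual_tokens[0])
    outputs, weights = [], []
    for q in text_tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in visual_tokens]
        w = softmax(scores)
        out = [sum(w_j * v[i] for w_j, v in zip(w, visual_tokens)) for i in range(d)]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

text = [[1.0, 0.0], [0.0, 1.0]]                       # two "language" tokens
visual = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]         # three "visual" tokens
out, w = cross_attention(text, visual)
print(w[0])  # the first text token attends most strongly to the first visual token
```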

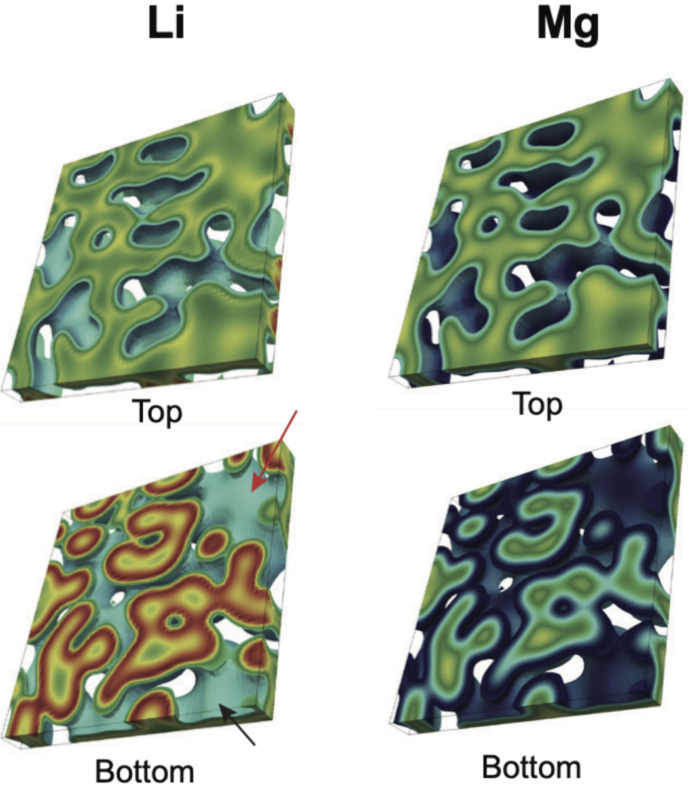

Multiphysics modeling using the finite element method (FEM)

- Modeling of emergent phenomena involving multiscale/multiphysics interactions using advanced numerical techniques and high-performance scientific computing.

- Developing phenomenological descriptions of the constituent processes and numerical formulations of the governing equations.

- Developing computational strategies and parallel code infrastructure for problems with millions of degrees of freedom.

- The code framework is written in C++ and uses open-source libraries such as deal.II (finite element library), PETSc, and Trilinos.

- Applications include solid-state batteries, shells, and neurons.
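The basic finite element workflow has five steps:

1. mesh the domain;
2. compute local element matrices;
3. assemble them into a global system;
4. apply boundary conditions;
5. solve the linear system.

These steps can be illustrated on the 1D Poisson problem -u'' = f on (0, 1) with u(0) = u(1) = 0 and linear elements. This pure-Python sketch is only a toy illustration of the workflow, not the C++ framework built on deal.II, PETSc, and Trilinos, whose assembly and parallel solvers are far more general. The constant load f and the direct tridiagonal (Thomas) solve are simplifying assumptions.

```python
def solve_poisson_1d(n_elems, f=1.0):
    """Linear FEM for -u'' = f on (0, 1), u(0) = u(1) = 0, on a uniform mesh.

    Returns the node coordinates and nodal values (boundary nodes included).
    """
    h = 1.0 / n_elems
    n = n_elems + 1
    # Global tridiagonal stiffness matrix stored as three diagonals, plus the load vector.
    lower, diag, upper = [0.0] * n, [0.0] * n, [0.0] * n
    rhs = [0.0] * n
    for e in range(n_elems):                 # loop over elements
        a, b = e, e + 1                      # global indices of the element's two nodes
        # Element stiffness (1/h) [[1, -1], [-1, 1]] and element load (f h / 2) [1, 1]
        diag[a] += 1.0 / h
        diag[b] += 1.0 / h
        upper[a] += -1.0 / h
        lower[b] += -1.0 / h
        rhs[a] += f * h / 2
        rhs[b] += f * h / 2
    # Dirichlet boundary conditions: replace the boundary rows with u = 0.
    for i in (0, n - 1):
        lower[i], diag[i], upper[i], rhs[i] = 0.0, 1.0, 0.0, 0.0
    # Thomas algorithm: forward elimination, then back substitution.
    c, d = [0.0] * n, [0.0] * n
    c[0], d[0] = upper[0] / diag[0], rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    u = [0.0] * n
    u[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        u[i] = d[i] - c[i] * u[i + 1]
    return [i * h for i in range(n)], u

x, u = solve_poisson_1d(8)
# Exact solution is x(1 - x)/2; linear elements reproduce it at the nodes up to round-off.
print(max(abs(ui - xi * (1 - xi) / 2) for xi, ui in zip(x, u)))
```

In a production code the same loop runs over distributed cells. The direct tridiagonal solve is replaced by parallel sparse solvers, which is what libraries such as PETSc and Trilinos provide.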

Physics-informed neural networks (PINNs)

- Used PINNs to approximate solutions of a general class of partial differential equations (PDEs).

- Implemented the PINNs in PyTorch and compared the results against solutions from numerical solvers.
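A PINN trains a network u_theta by minimizing a loss with two parts: the PDE residual at collocation points, plus a penalty on the boundary conditions.

The sketch below shows that loss for the 1D Poisson problem -u'' = f with u(0) = u(1) = 0. The PDE is an assumed example. It uses a tiny one-hidden-layer tanh network whose second derivative is written out by hand, so the example needs only the standard library. A PyTorch version would typically obtain u'' through autograd and minimize the loss with an optimizer such as Adam. Training is not shown here.

```python
import math

def u_and_uxx(x, params):
    """Value and second x-derivative of u(x) = c + sum_j v_j * tanh(w_j * x + b_j).

    The derivative is written out by hand here; a PyTorch PINN would use autograd.
    """
    w, b, v, c = params
    u, uxx = c, 0.0
    for wj, bj, vj in zip(w, b, v):
        t = math.tanh(wj * x + bj)
        u += vj * t
        uxx += vj * wj ** 2 * (-2.0 * t * (1.0 - t ** 2))  # d^2/dx^2 of tanh(w x + b)
    return u, uxx

def pinn_loss(params, f, n_colloc=16):
    """PINN loss for -u'' = f on (0, 1) with u(0) = u(1) = 0.

    Mean squared PDE residual at interior collocation points plus the mean
    squared boundary-condition error.
    """
    xs = [(i + 0.5) / n_colloc for i in range(n_colloc)]   # interior collocation points
    pde = sum((-u_and_uxx(x, params)[1] - f(x)) ** 2 for x in xs) / n_colloc
    bc = sum(u_and_uxx(x, params)[0] ** 2 for x in (0.0, 1.0)) / 2
    return pde + bc

# params = (hidden weights, hidden biases, output weights, output bias); values are arbitrary.
params = ([1.5, -0.7], [0.1, 0.3], [0.8, 1.2], 0.2)
print(pinn_loss(params, lambda x: 1.0))
```

Comparing a trained u_theta with a numerical solver's solution on the same grid, for example through a relative L2 error, is the kind of check described above.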